“GPUs are melting”: the AI boom and an infrastructure warning

In March 2025, OpenAI CEO Sam Altman posted the following message on X (formerly Twitter):

“It's super fun seeing people love images in ChatGPT, but our GPUs are melting.”

It was a humorous way of describing the server overload caused by a surge in requests after the Ghibli-style image generation feature drew an explosive response.

However, the statement wasn't just a joke. OpenAI had to introduce temporary usage limits to prevent GPU overload, and those limits affected users around the world.

This case clearly illustrates the new reality of AI infrastructure operations. GPUs, a core asset of the AI era, are no longer just “high-performance computing equipment.” They are now strategic infrastructure that determines business continuity and operational efficiency. Properly “seeing” these GPUs, that is, monitoring them, has become essential for survival.

Why is GPU monitoring necessary now?

Having a large number of GPUs is not enough. What is needed now is the ability to measure and manage, in quantitative terms, how well those GPUs are being used. Here are seven practical reasons why GPU monitoring goes beyond simply watching equipment.

1. GPUs are expensive assets, and unmonitored GPUs lose money

GPUs are among the most expensive components of AI infrastructure. Yet in real production environments, GPUs are frequently overallocated or left idle.

Without tracking metrics such as utilization, latency, and per-process share, waste accumulates and return on investment (ROI) worsens.
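As a rough illustration of how utilization tracking turns into a cost figure, the sketch below computes the share of samples in which each GPU sat idle and prices that idle time. The `GpuSample` record, the 5% idle threshold, and the hourly cost are all hypothetical values chosen for the example, not figures from any real collector or price list.

```python
from dataclasses import dataclass


@dataclass
class GpuSample:
    gpu_id: str
    utilization_pct: float  # 0-100, as a collector might report it (assumed format)


def idle_fraction(samples, idle_threshold_pct=5.0):
    """Return, per GPU, the share of samples at or below the idle threshold."""
    totals = {}
    idle = {}
    for s in samples:
        totals[s.gpu_id] = totals.get(s.gpu_id, 0) + 1
        if s.utilization_pct <= idle_threshold_pct:
            idle[s.gpu_id] = idle.get(s.gpu_id, 0) + 1
    return {gpu: idle.get(gpu, 0) / n for gpu, n in totals.items()}


def wasted_cost(idle_share, hours, hourly_cost):
    """Estimate the cost of idle time over a period (hourly_cost is hypothetical)."""
    return idle_share * hours * hourly_cost


samples = [
    GpuSample("gpu-0", 92.0), GpuSample("gpu-0", 88.0),
    GpuSample("gpu-1", 0.0), GpuSample("gpu-1", 3.0),
    GpuSample("gpu-1", 75.0), GpuSample("gpu-1", 1.0),
]
shares = idle_fraction(samples)
for gpu, share in shares.items():
    print(gpu, f"idle {share:.0%}", f"wasted {wasted_cost(share, 24, 2.0):.2f} per day")
```

In practice the threshold and sampling interval would need tuning, since a GPU that is briefly idle between batches is not necessarily wasted.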

2. GPUs have a shorter lifespan than CPUs and are more likely to fail

GPUs operate under extreme computational load and heat. Elevated temperatures, power overloads, and memory leaks can lead to equipment failure without warning.

By tracking temperature, power, and memory usage patterns in real time, early signs of abnormalities can be detected and failures can be prevented in advance.
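A minimal sketch of this kind of early-warning check might look like the following. The metric names and threshold values are illustrative assumptions, not vendor limits; real limits depend on the GPU model and should come from its specifications.

```python
# Illustrative thresholds only; actual limits vary by GPU model.
THRESHOLDS = {"temperature_c": 85.0, "power_w": 300.0, "memory_used_pct": 95.0}


def check_reading(reading, thresholds=THRESHOLDS):
    """Return the sorted names of metrics at or above their threshold."""
    return sorted(
        name for name, limit in thresholds.items()
        if reading.get(name, 0.0) >= limit
    )


def sustained_breach(readings, metric, limit, min_consecutive=3):
    """True if the metric stays at or above the limit for N consecutive readings."""
    streak = 0
    for r in readings:
        streak = streak + 1 if r[metric] >= limit else 0
        if streak >= min_consecutive:
            return True
    return False


reading = {"temperature_c": 90.0, "power_w": 250.0, "memory_used_pct": 96.0}
print(check_reading(reading))
```

Requiring several consecutive breaches before alerting is one simple way to avoid reacting to a single noisy reading.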

3. AI workloads can consume GPUs inefficiently

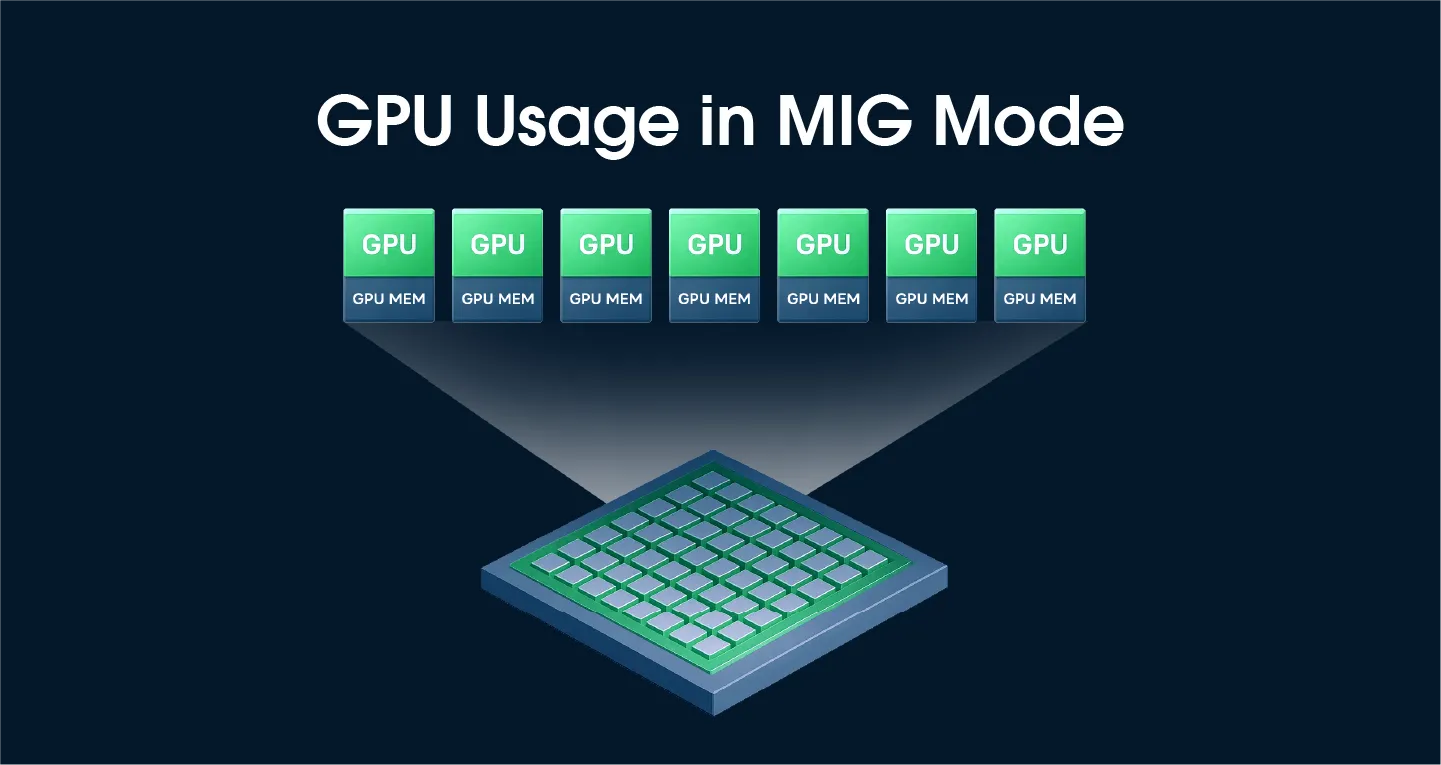

AI model training jobs and inference tasks stress different GPU resources, such as cores, memory, and bandwidth. In production environments, however, statically allocating GPUs to jobs often wastes resources and causes bottlenecks.

Using granular GPU-level metrics to design a resource allocation strategy that matches the characteristics of each AI workload can improve speed and reduce operating costs.

4. GPU visibility is essential for Kubernetes-based AI operations

Many companies run AI infrastructure on Kubernetes clusters, but because basic monitoring tools focus on CPU and memory, the GPU metrics they provide are very limited.

If you don't know which nodes your GPUs are deployed on and how each pod is using them, it's difficult to respond quickly when resource bottlenecks and failures occur.

WhaTap GPU monitoring visualizes GPU status in real time through a dashboard optimized for K8s environments.
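Independent of any particular product, the per-node and per-pod view described above comes down to grouping GPU samples by their Kubernetes labels. The sketch below assumes each sample is a plain dictionary with hypothetical `node`, `pod`, and `utilization_pct` fields; it is not the output format of any specific exporter.

```python
from collections import defaultdict


def summarize_by(records, key):
    """Average GPU utilization grouped by the given key ('node' or 'pod')."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for r in records:
        sums[r[key]] += r["utilization_pct"]
        counts[r[key]] += 1
    return {k: sums[k] / counts[k] for k in sums}


# Hypothetical samples; field names are assumptions for this example.
records = [
    {"node": "node-a", "pod": "train-0", "utilization_pct": 90.0},
    {"node": "node-a", "pod": "train-0", "utilization_pct": 80.0},
    {"node": "node-b", "pod": "infer-0", "utilization_pct": 10.0},
    {"node": "node-b", "pod": "infer-1", "utilization_pct": 30.0},
]

print(summarize_by(records, "node"))
print(summarize_by(records, "pod"))
```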

5. More than monitoring: the starting point for strategic infrastructure operations

More than simply checking equipment health, GPU monitoring is a strategic tool for maximizing resource utilization and building a predictable infrastructure operating model. Accumulated GPU data is a key basis for predicting when to expand capacity, establishing automatic scaling policies, and optimizing operating costs, which makes it the starting point for FinOps and AIOps strategies.

In particular, capacity planning based on GPU monitoring is a key means of securing both stability and scalability while preventing unnecessary overprovisioning.
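One simple way to turn accumulated utilization data into a capacity estimate is to fit a straight line to recent averages and see when the trend reaches a chosen limit. The sketch below uses ordinary least squares over equally spaced samples; the weekly figures and the 80% limit are made-up values, and a linear trend is only an assumption that real workloads may not follow.

```python
def fit_line(ys):
    """Least-squares slope and intercept for samples at x = 0, 1, 2, ...

    Requires at least two samples.
    """
    n = len(ys)
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def periods_until(ys, limit):
    """Periods after the last sample until the trend reaches the limit.

    Returns None when the trend is flat or falling.
    """
    slope, intercept = fit_line(ys)
    if slope <= 0:
        return None
    x_hit = (limit - intercept) / slope
    return max(0.0, x_hit - (len(ys) - 1))


weekly_avg_utilization = [40, 45, 50, 55, 60]  # hypothetical cluster averages (%)
print(periods_until(weekly_avg_utilization, 80))
```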

6. GPU failure is a business failure

Many AI-based services, such as image generation, speech analysis, and real-time translation, already use GPUs as core computing resources. In these services, a GPU failure is not simply a slowdown; it can lead directly to unresponsive AI features, degraded quality, and even total service interruption.

In particular, in B2B service environments where service level agreements (SLAs) are important, failure to detect GPU anomalies in real time can result in poor availability, customer churn, and even financial losses due to SLA violations.

For example, according to a Gartner report, the average loss due to IT infrastructure failure is $5,600 per minute (approximately 7.5 million Korean won), which works out to about $336,000 per hour. GPU failures fall into this category as well.
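To make the arithmetic concrete, the sketch below applies the $5,600-per-minute figure quoted above to an outage of a given length, and computes how many minutes of downtime an availability target leaves per month. The 99.9% target and the 30-day month are hypothetical inputs, not terms from any real SLA.

```python
def downtime_cost(minutes, cost_per_minute=5600):
    """Estimated loss in dollars for an outage, using the per-minute figure cited above."""
    return minutes * cost_per_minute


def allowed_downtime_minutes(availability_pct, days=30):
    """Minutes of downtime per period that still meet the availability target."""
    total_minutes = days * 24 * 60
    return total_minutes * (100 - availability_pct) / 100


print(downtime_cost(60))                          # one hour of downtime
print(round(allowed_downtime_minutes(99.9), 1))   # monthly budget at a 99.9% target
```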

7. GPU monitoring is a key pillar of observability

Observability refers to the ability to go beyond simply checking indicators: collecting the state of the entire system across multiple dimensions, then analyzing and predicting by linking cause and effect. In AI infrastructure specifically, a GPU is both a single resource and a complex one, and it can be a major cause of poor performance, bottlenecks, and resource mismatches.

For example, a GPU memory bottleneck slows down inference, which can cascade into longer API response times and a poor user experience. Without linking GPU status to other metrics in this way, it's difficult to determine the root cause of a problem.
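A first step toward linking GPU status with other metrics is to check whether two time-aligned series move together. The sketch below computes a Pearson correlation between GPU memory usage and API latency; both series are invented for illustration, and a high coefficient only suggests where to look, since it does not by itself establish the cause.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length with at least 2 points")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical, time-aligned samples.
gpu_memory_used_pct = [60, 70, 80, 90, 95]
api_latency_ms = [120, 130, 150, 210, 260]

r = pearson(gpu_memory_used_pct, api_latency_ms)
print(f"correlation: {r:.2f}")
```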

GPU monitoring is both the starting point and a key component of an observability framework. To secure real stability and scalability for AI/ML infrastructure, an end-to-end monitoring system that includes GPUs is essential.

Bottom line: GPU monitoring is no longer an option

In the AI era, GPUs are a key driver of corporate growth and, at the same time, a risk factor that incurs huge costs if not managed properly. GPU monitoring is more than just checking resource usage. It plays an essential role in improving the overall efficiency of AI infrastructure operations, including reducing costs, preventing failures, and shaping infrastructure strategy.

GPU monitoring is no longer optional; it is a prerequisite for sustainable operations. Now is the time to inspect your GPUs and operate them systematically, using a real-time monitoring system to avoid wasting GPU resources.
