Many web services now use HTTP/2. The WhaTap monitoring SaaS is also served over HTTP/2 through an ALB on AWS. HTTP/2 keeps all the core concepts of the existing HTTP/1.x protocol while delivering significantly better performance. So if your web service currently communicates over HTTP/1.1, you can expect a noticeable performance gain simply by enabling the options and features that HTTP/2 requires. Let's briefly go over the core of HTTP/2 and then enable HTTP/2 communication on Tomcat.

HTTP/1.x

The biggest difference between HTTP/2 (hereafter H2) and HTTP/1.x (hereafter H1) is speed. You can think of H2 as a revision that addresses H1's performance problems and inefficiencies. Let's look at what was problematic in H1 and how H2 addresses it.

HTTP/1.1

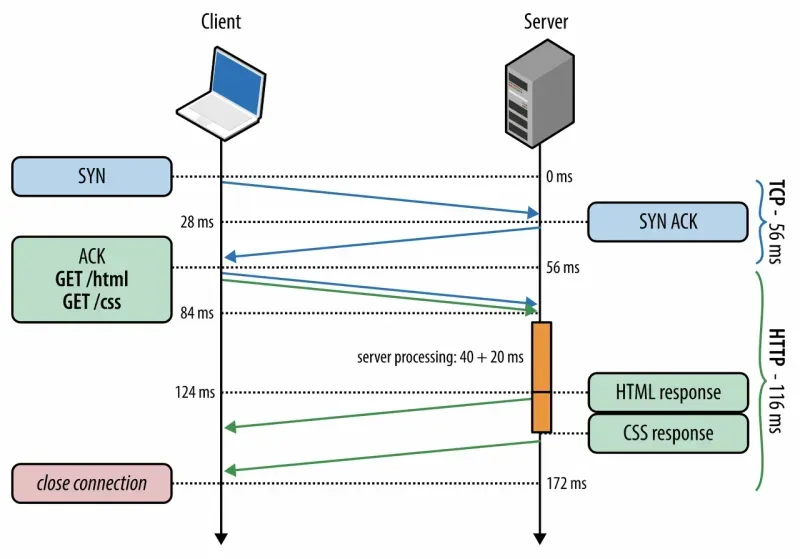

HTTP is a protocol that works on top of TCP connections. To ensure reliability, TCP uses handshakes to open and close connections. HTTP was also designed as a connectionless protocol: it makes a single request and response over a single connection and then disconnects. This means every request pays the handshake overhead, which became costly as web services evolved from hypertext into hypermedia with lots of static data. The Keep-Alive feature was therefore added in HTTP/1.1 to improve performance: it keeps a connection open and reuses it, avoiding unnecessary handshakes. As web pages became more media-rich and less text-oriented, and came to require techniques for maintaining state (cookies, sessions, etc.), improving performance became imperative. Further features were added along the way, eventually leading to H2.

HTTP/1.1 also introduced a technique called pipelining to improve performance. It reduces latency by sending multiple requests on a single connection without waiting for each response, and receiving the responses in the same order. Because responses must come back in request order, a request that finishes quickly still has to wait until the responses ahead of it have completed. In HTTP this is called the HOL (Head-of-Line) blocking problem, and it is a major weakness of pipelining. For this reason most modern browsers have disabled pipelining. Instead, when communicating over H1, the client (browser) opens 6 to 8 connections (depending on the browser) and spreads requests across them to parallelize the work.
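The HOL blocking effect described above can be illustrated with a toy model. This is a sketch, not a measurement: it assumes the server has each response ready at a given time (the millisecond values are made up for illustration) and ignores transfer time entirely.

```python
from itertools import accumulate


def pipelined_delivery(ready_times_ms):
    """Pipelining: responses are delivered in request order, so each
    response waits for the slowest response ahead of it."""
    return list(accumulate(ready_times_ms, max))


def independent_delivery(ready_times_ms):
    """No ordering constraint (e.g. separate connections): each response
    is delivered as soon as it is ready."""
    return list(ready_times_ms)


# Hypothetical times at which the server has each response ready.
ready = [300, 20, 20]
print(pipelined_delivery(ready))    # [300, 300, 300]
print(independent_delivery(ready))  # [300, 20, 20]
```

The two fast responses are held back behind the slow first one under pipelining, which is exactly why browsers fall back to multiple connections.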

HTTP/1.1 Pipelining

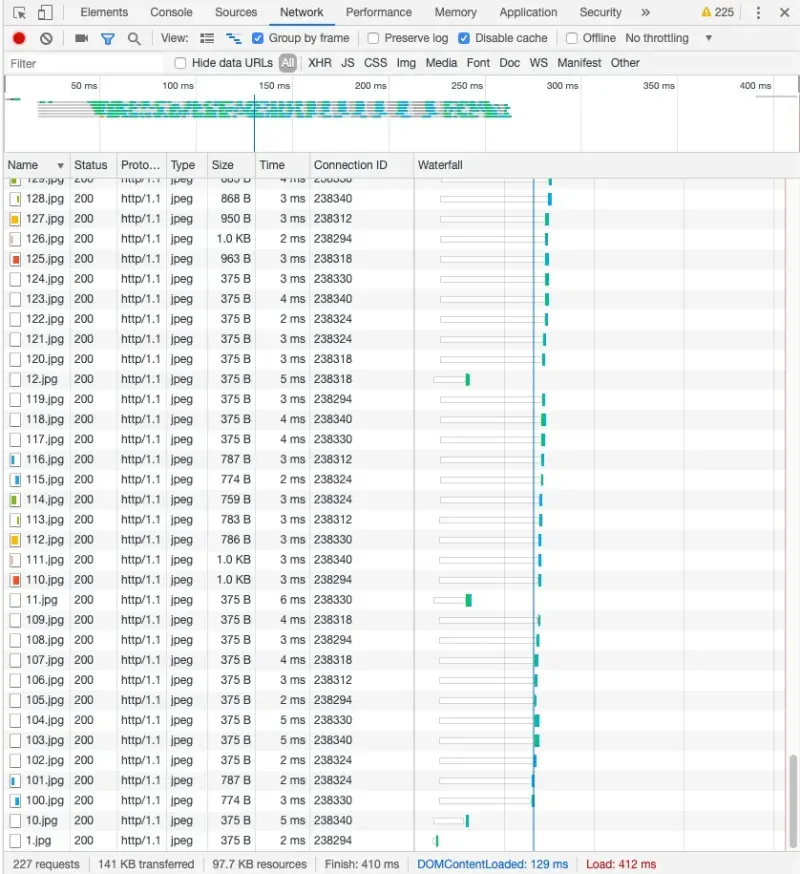

Chrome Developer Tools confirms that six connection IDs were used for the HTTP/1.1 requests and responses.

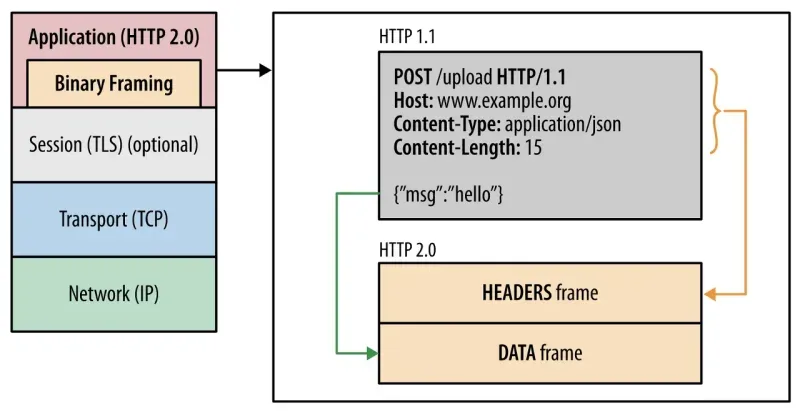

Binary framing and multiplexing in HTTP/2

At the core of H2 is a binary framing layer that supports multiplexing of requests and responses. HTTP messages are broken into binary frames, which are sent and then reassembled on the receiving end. Frames from different requests and responses can be interleaved, so multiple requests and responses are in flight at the same time on a single connection. The framing is handled by the server and the client (browser), so applications do not need any major changes. Binary framing and multiplexing allow parallel processing without multiple connections and, unlike pipelining, avoid the HTTP-level HOL blocking problem.
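As a rough sketch of the idea, the following toy code splits several messages into frames, interleaves them on one "connection", and reassembles them by stream ID. It is only an illustration: real H2 frames are binary, carry compressed headers, and are scheduled by the implementation. `FRAME_SIZE` and the message contents are hypothetical.

```python
from collections import defaultdict
from itertools import zip_longest

FRAME_SIZE = 4  # hypothetical tiny payload size so the interleaving is visible


def to_frames(stream_id, message):
    """Split one message into (stream_id, chunk) frames."""
    return [(stream_id, message[i:i + FRAME_SIZE])
            for i in range(0, len(message), FRAME_SIZE)]


def multiplex(messages):
    """Interleave the frames of several streams round-robin."""
    per_stream = [to_frames(sid, msg) for sid, msg in messages.items()]
    return [frame
            for group in zip_longest(*per_stream)
            for frame in group
            if frame is not None]


def demultiplex(frames):
    """Reassemble each message by grouping frames on their stream ID."""
    buffers = defaultdict(bytes)
    for stream_id, chunk in frames:
        buffers[stream_id] += chunk
    return dict(buffers)


# Client-initiated streams use odd IDs in HTTP/2.
messages = {1: b"GET /index.html", 3: b"GET /logo.png", 5: b"GET /a.css"}
wire = multiplex(messages)
assert demultiplex(wire) == messages
print(wire[:4])
```

Because every frame names its stream, the receiver can rebuild each message regardless of how the frames were interleaved.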

In addition, H2 adds stream prioritization, header compression to reduce header overhead, and server push, which lets the server proactively send resources the client will need before the client explicitly requests them, reducing response time. You can read more about H2's evolution, goals, and technical details on the Google developer page.

Binary Framing Layer (Source: Google)

Terminology

Stream: A bidirectional flow of bytes within an established connection, which may carry one or more messages.

Message: A complete sequence of frames that maps to a logical request or response message.

Frame: The smallest unit of communication in HTTP/2. Each frame contains a frame header, which at a minimum identifies the stream to which the frame belongs.
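To make the frame header concrete, here is a minimal sketch that encodes and decodes it. The layout follows the HTTP/2 specification: a 24-bit payload length, an 8-bit type, 8-bit flags, and one reserved bit followed by a 31-bit stream identifier, 9 bytes in total. The function names are my own and not part of any library.

```python
DATA, HEADERS = 0x0, 0x1            # two of the frame types
END_STREAM, END_HEADERS = 0x1, 0x4  # two of the flags


def pack_frame_header(length, frame_type, flags, stream_id):
    """Encode the 9-byte HTTP/2 frame header."""
    if not 0 <= length < 2**24:
        raise ValueError("length must fit in 24 bits")
    if not 0 <= stream_id < 2**31:
        raise ValueError("stream_id must fit in 31 bits")
    return (length.to_bytes(3, "big")
            + bytes([frame_type, flags])
            + stream_id.to_bytes(4, "big"))


def unpack_frame_header(header):
    """Decode a 9-byte header into (length, type, flags, stream_id)."""
    if len(header) != 9:
        raise ValueError("an HTTP/2 frame header is exactly 9 bytes")
    length = int.from_bytes(header[0:3], "big")
    frame_type, flags = header[3], header[4]
    # Mask off the reserved high bit to get the 31-bit stream identifier.
    stream_id = int.from_bytes(header[5:9], "big") & 0x7FFFFFFF
    return length, frame_type, flags, stream_id


# A HEADERS frame on stream 1 with a 16-byte payload that ends the stream.
header = pack_frame_header(16, HEADERS, END_HEADERS | END_STREAM, 1)
print(header.hex())  # 000010010500000001
print(unpack_frame_header(header))
```

The stream identifier in every header is what allows frames from different streams to share one connection.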

Now let's try setting up H2 with a real Tomcat and create a simple WAS to compare page load times for HTTP/1.1 and HTTP/2.

Setting up H2 with Tomcat for a simple comparison with H1

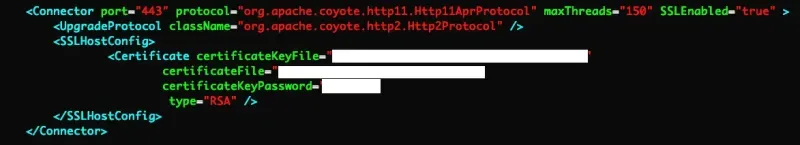

Tomcat supports H2 from version 8.5 onward. With 8.5, however, Tomcat Native (APR) must be installed for H2 to work. H2 also needs TLS to be enabled in practice: the H2 standard does not mandate TLS, but most browsers will not communicate over H2 unless TLS is enabled.
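Over TLS, the client and server agree on H2 during the handshake through the ALPN extension. One way to check what a server selects, independent of a browser, is a small script like the sketch below. The helper names are my own, and disabling certificate verification is only appropriate for a local test server with a self-signed certificate.

```python
import socket
import ssl


def build_context(verify=True):
    """TLS context that offers h2 first, then http/1.1, via ALPN."""
    ctx = ssl.create_default_context()
    if not verify:
        # Assumption: a local test server with a self-signed certificate.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    ctx.set_alpn_protocols(["h2", "http/1.1"])
    return ctx


def negotiated_protocol(host, port=443, verify=True, timeout=5.0):
    """Return the protocol the server selected: 'h2', 'http/1.1', or None."""
    ctx = build_context(verify)
    with socket.create_connection((host, port), timeout=timeout) as raw:
        with ctx.wrap_socket(raw, server_hostname=host) as tls:
            return tls.selected_alpn_protocol()
```

For example, `negotiated_protocol("localhost", 8443, verify=False)` would be expected to return `"h2"` against a connector with H2 enabled; the host and port here are placeholders for your own setup.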

I set up HTTPS and APR on Tomcat 8.5 and created a WAS that serves the WhaTap logo split into 225 images. Since the Tomcat server is installed on a local VM, I used network throttling to force the latency to 30ms and turned off browser caching so that the performance difference is visible to the naked eye. To enable HTTP/2, all you need to do is install Tomcat Native and configure the connector as shown below. If the browser supports H2, it will communicate over H2; otherwise it will fall back to H1.

Tomcat HTTP/2 connector settings

In the image above, H2 shows a nearly 2x performance improvement under the same conditions. The difference becomes even more pronounced in high-latency environments. The total amount of data transferred is also reduced, which lowers the network load. Once H2 is set up, improving overall performance takes little additional effort.

In the next post, we will use the Tomcat WAS we created here to collect packets to learn a little more about how header frames and data frames are sent and received.

Reference

https://developers.google.com/web/fundamentals/performance/http2/
