In the field of application performance, average response time refers to the average time it takes for an application server to return the result of a user request. The response time of an application server is typically on the order of milliseconds, but it can vary significantly depending on the workload.

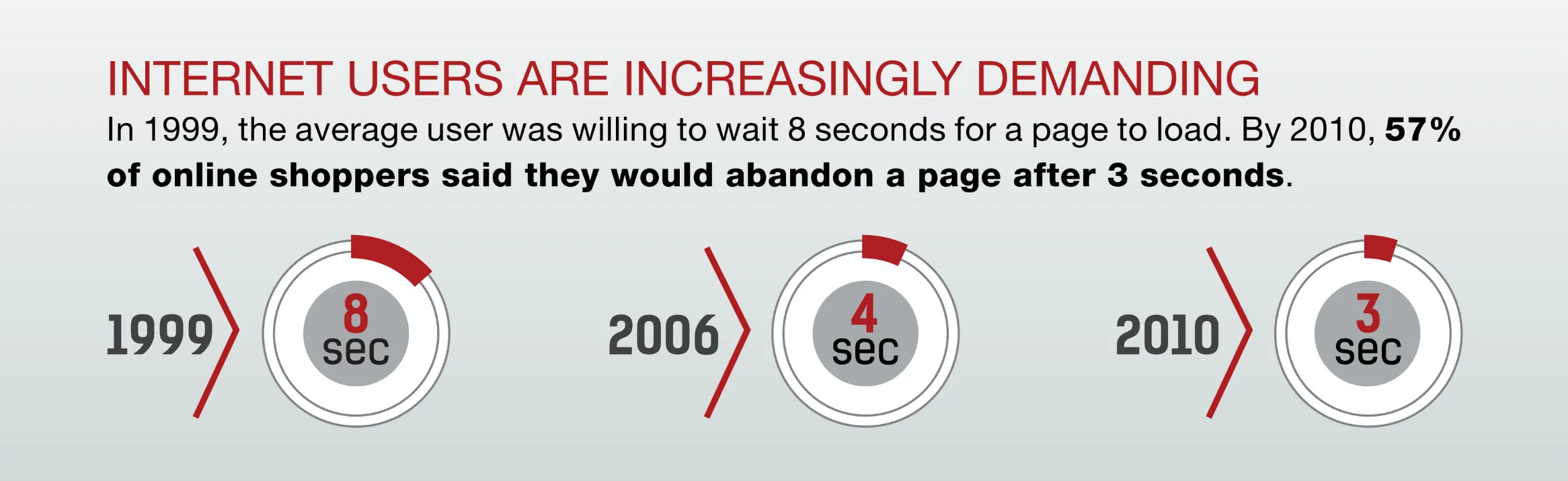

The waiting time for customers is 3 seconds

In 1999, in the early days of the commercial internet, the acceptable load time for e-commerce sites was 8 seconds. By 2006, it had dropped to 4 seconds. Today, the threshold at which customers start to leave is 3 seconds. According to a Google study, more than half of mobile users abandon a page that takes longer than 3 seconds to load. Those 3 seconds include page rendering time and network time, so the web application itself should respond within milliseconds. In practice, however, the average response time of web applications tends to rise when service problems occur.

Average Response Time in Performance Analysis

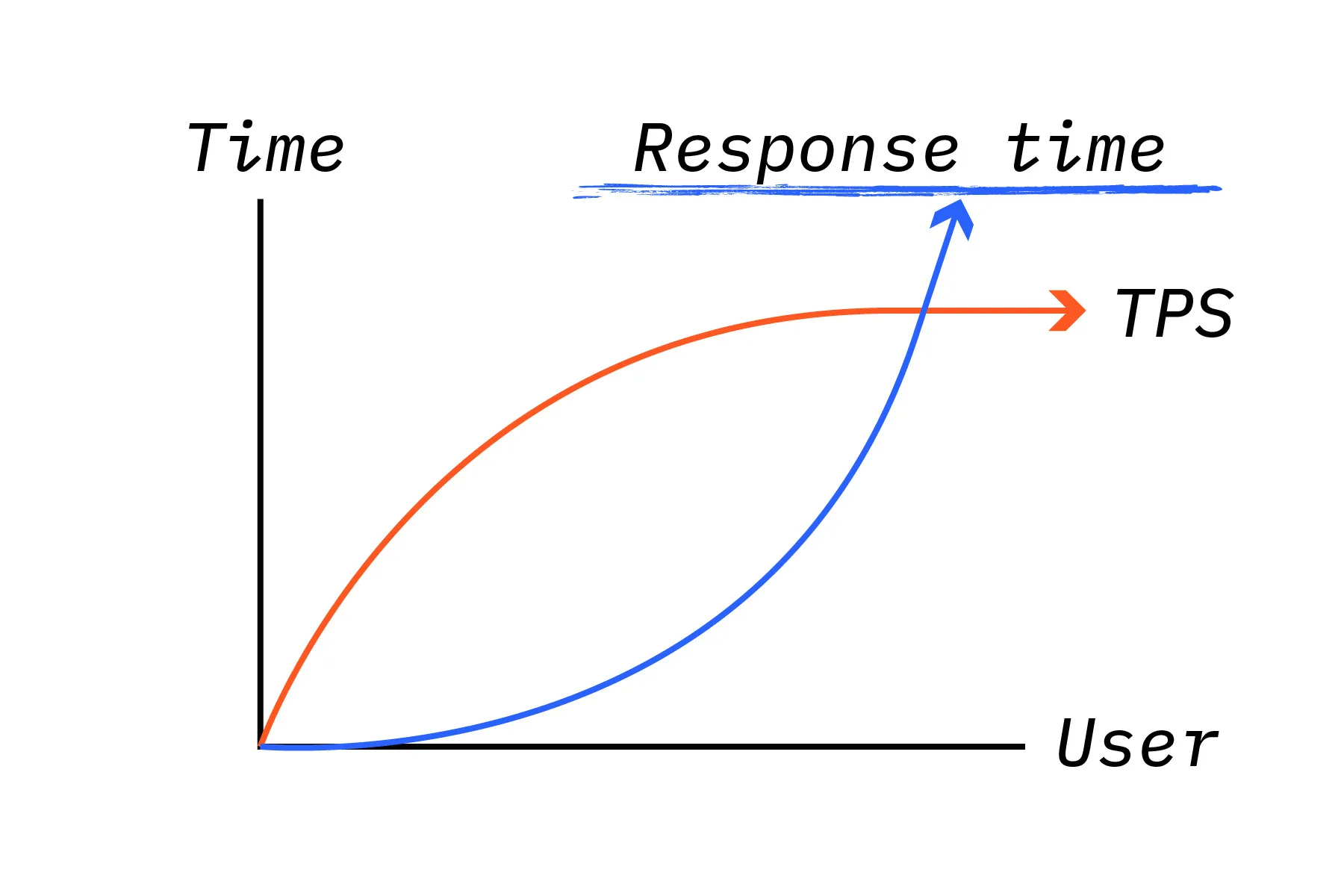

As the load increases, throughput per second stops increasing once a threshold is passed. When TPS has plateaued and only the number of users keeps growing, TPS and think time effectively act as constants, so response time increases in proportion to the number of users.

Response Time = [Concurrent Users / Transactions Per Second (TPS)] - Think Time
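
A minimal Python sketch of the formula above, assuming the system has already saturated at a fixed TPS. The 10 TPS and 10-second think time are hypothetical figures chosen for illustration, not measurements. The loop shows response time growing linearly with the number of concurrent users once TPS stops increasing:

```python
def response_time(concurrent_users: float, tps: float, think_time: float) -> float:
    """Response Time = (Concurrent Users / TPS) - Think Time, all times in seconds."""
    if tps <= 0:
        raise ValueError("tps must be positive")
    return concurrent_users / tps - think_time


# Hypothetical saturated system: TPS is stuck at 10 and users pause 10 s.
SATURATED_TPS = 10.0
THINK_TIME = 10.0

for users in (110, 120, 130, 140):
    print(users, response_time(users, SATURATED_TPS, THINK_TIME))
```

In this setup every additional 10 users adds 1 second of response time, which is the proportional growth described above. The formula only describes the saturated region; below saturation it can return zero or a negative value, which has no physical meaning.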

In typical scenarios, however, response time is measured in milliseconds, while think time ranges from about 3 seconds to more than 10 seconds. Now let's build a performance analysis scenario. Suppose we are developing a web service that translates English sentences into Korean, and we expect 100 concurrent users. Given the nature of the service, a user takes an average of 30 seconds between receiving one translation and sending the next request. Finally, the design target is a maximum response time of 0.5 seconds.

In this case, the target transactions per second is the number of concurrent users divided by the sum of the response time (0.5 seconds) and the think time (30 seconds): Concurrent Users (100) / (Response Time (0.5 seconds) + Think Time (30 seconds)) = 100 / 30.5, or approximately 3.28.

Transactions Per Second (TPS) = Concurrent Users / [Response Time + Think Time]
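
The same calculation as a short Python sketch. `target_tps` is a hypothetical helper name; the inputs are the 100 users, 0.5-second response time, and 30-second think time from the translation scenario:

```python
def target_tps(concurrent_users: float, response_time: float, think_time: float) -> float:
    """TPS = Concurrent Users / (Response Time + Think Time), times in seconds."""
    return concurrent_users / (response_time + think_time)


tps = target_tps(100, 0.5, 30)
print(f"{tps:.2f}")  # 3.28
```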

When calculating performance, the service's processing time (the response time) is much smaller than the think time. As think time grows, the influence of response time on TPS therefore approaches zero.
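
To illustrate how small that influence is, the sketch below fixes the 100 users and 0.5-second response time from the scenario and varies think time (the think-time values are arbitrary illustrations). For each value it prints the fraction of the request cycle taken by response time and the TPS with and without response time included:

```python
def target_tps(users: float, response_time: float, think_time: float) -> float:
    """TPS = users / (response time + think time)."""
    return users / (response_time + think_time)


def response_time_share(response_time: float, think_time: float) -> float:
    """Fraction of one request cycle (R + Z) spent waiting for the response."""
    return response_time / (response_time + think_time)


USERS = 100
RESPONSE_TIME = 0.5  # seconds, from the translation scenario

for think_time in (3, 10, 30, 60):
    share = response_time_share(RESPONSE_TIME, think_time)
    with_rt = target_tps(USERS, RESPONSE_TIME, think_time)
    without_rt = target_tps(USERS, 0.0, think_time)
    print(f"Z={think_time:>2}s  share={share:.1%}  "
          f"TPS={with_rt:.2f}  (ignoring R: {without_rt:.2f})")
```

With a 60-second think time, response time accounts for less than 1% of each cycle, so ignoring it barely changes the TPS target.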

In conclusion, when designing for performance, response time is a less critical factor than think time.

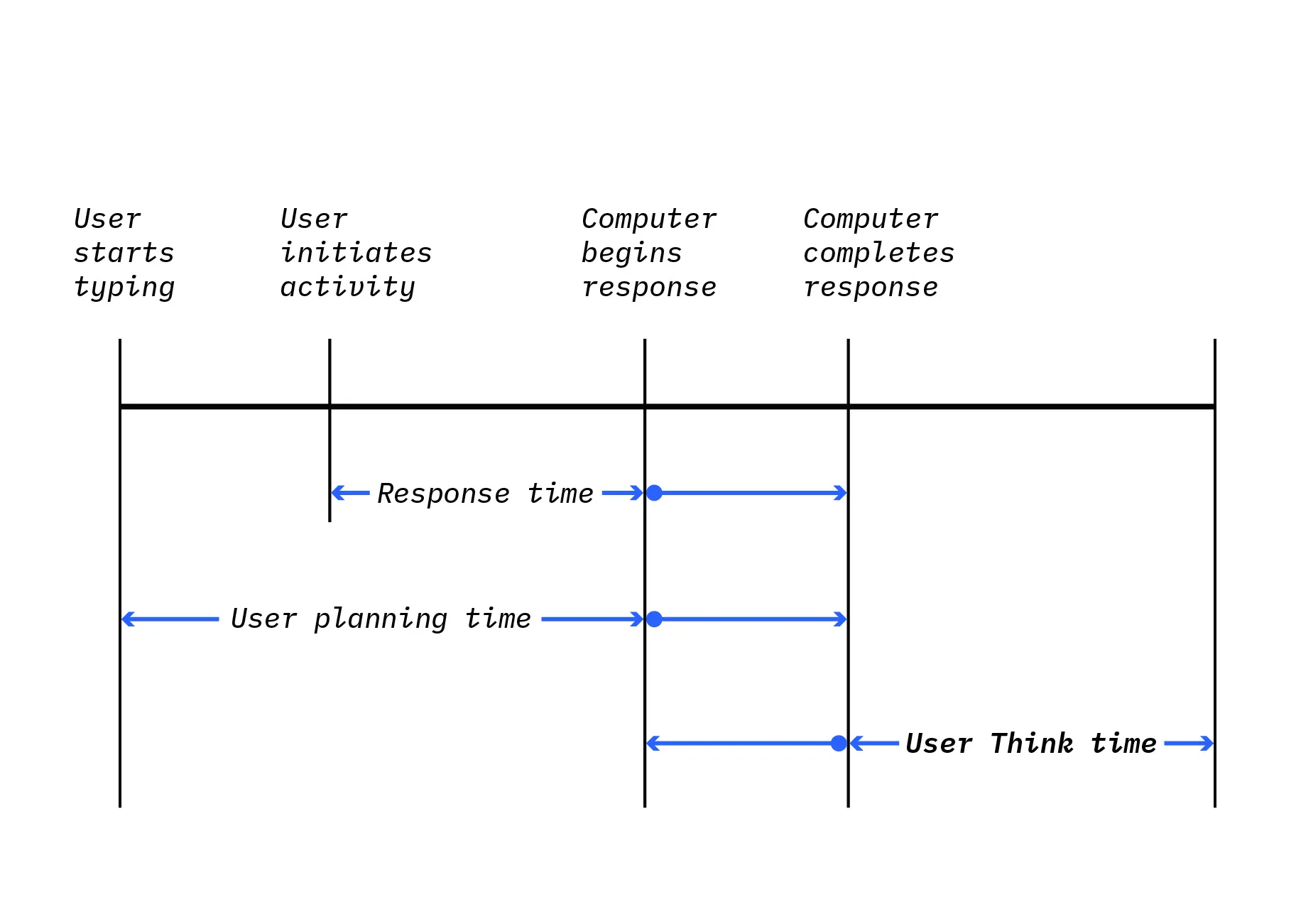

What is Think Time?

When people use a web service, they need time to review the result of a request before making the next one. This interval between one request and the next is called think time. Think time varies by user and by service type. For instance, system-to-system interactions have much lower think time than web services used by people, and the think time for a pre-search service is much shorter than that for a blog service. Analyzing the service's domain to determine think time is therefore crucial. With think time, you can calculate not only the number of requests that must be completed per minute but also how many concurrent users the system can support.
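
Rearranging the TPS formula gives Concurrent Users = TPS × (Response Time + Think Time). A minimal sketch, assuming a hypothetical system whose maximum sustainable throughput is 20 TPS; the function names and the 20 TPS figure are illustrative, not taken from a real system:

```python
def supported_users(max_tps: float, response_time: float, think_time: float) -> float:
    """Concurrent users a system can serve at max_tps (rearranged TPS formula)."""
    return max_tps * (response_time + think_time)


def requests_per_minute(concurrent_users: float, response_time: float, think_time: float) -> float:
    """Requests the system must complete each minute for the given user load."""
    return concurrent_users / (response_time + think_time) * 60


# Hypothetical capacity: 20 TPS with a 0.5 s response time.
print(supported_users(20, 0.5, 30))       # users pausing 30 s between requests
print(supported_users(20, 0.5, 3))        # short think time, e.g. a pre-search service
print(requests_per_minute(100, 0.5, 30))  # load from the translation scenario
```

At the same 20 TPS, a 30-second think time supports 610 concurrent users while a 3-second think time supports only 70, which is why the think time of the service's domain matters so much for capacity planning.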

Average Response Time as a Tuning Metric

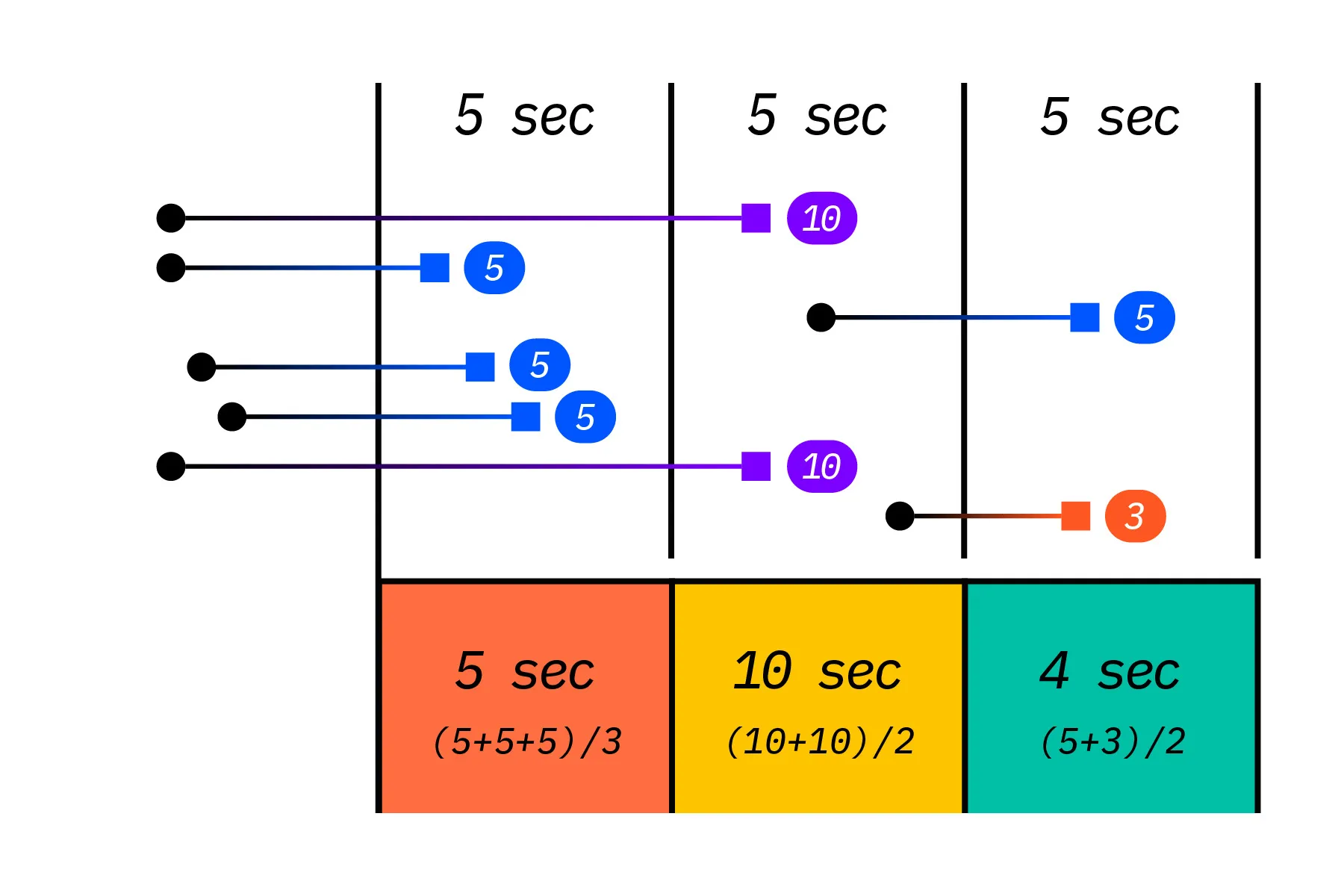

In practice, the response time of a web service may not follow these formulas. That is why many performance analysis tools display average response time. The average response time reported by these tools is the sum of the response times of the transactions collected during the collection interval, divided by the number of those transactions.

WhaTap's service calculates average response time at 5-second intervals. Response time is more meaningful as a tuning metric than as a performance metric. For instance, during quiet periods with few users, some long-running transactions such as batch jobs can make the average response time longer than during busy daytime periods. Even so, response time is a very direct metric for improving actual performance. If something is raising the average response time regardless of TPS, examine the average response time together with the surrounding factors.
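
The averaging described above can be sketched as fixed-window bucketing. WhaTap's actual implementation is not described here, so this is an assumed illustration: transactions are `(end_time_s, response_time_s)` pairs (a hypothetical format), grouped into 5-second windows by completion time and averaged per window. The sample data is made up to show how one slow batch job in a quiet window can push that window's average far above a busy one:

```python
from collections import defaultdict
from collections.abc import Iterable

WINDOW_SECONDS = 5  # interval from the text; the bucketing logic is an assumption


def average_response_times(
    transactions: Iterable[tuple[float, float]], window: int = WINDOW_SECONDS
) -> dict[int, float]:
    """Map each window start time to the mean response time of the
    transactions that completed inside that window."""
    totals: defaultdict[int, float] = defaultdict(float)
    counts: defaultdict[int, int] = defaultdict(int)
    for end_ts, elapsed in transactions:
        start = int(end_ts // window) * window  # window the transaction ended in
        totals[start] += elapsed
        counts[start] += 1
    return {start: totals[start] / counts[start] for start in sorted(totals)}


# Hypothetical data: a busy window of fast requests, then a quiet window
# with one ordinary request and one slow batch job.
busy = [(0.4, 0.20), (1.1, 0.25), (2.3, 0.30), (3.7, 0.25), (4.9, 0.20)]
quiet = [(6.0, 0.20), (8.5, 12.0)]
print(average_response_times(busy + quiet))
```

Here the busy window averages about 0.24 seconds while the quiet window averages about 6.1 seconds, because a single 12-second batch job dominates a window that contains only two transactions.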
