Introduction

As microservice architecture (MSA) has matured, more and more people are looking at Kubernetes environments, and many developers and engineers are taking a deeper dive into Kubernetes. In this article, we will discuss using a cloud vendor's managed service, autoscaling strategies, deployment strategies, and other things to consider when designing your Kubernetes architecture.

Creating a Cluster with Amazon EKS

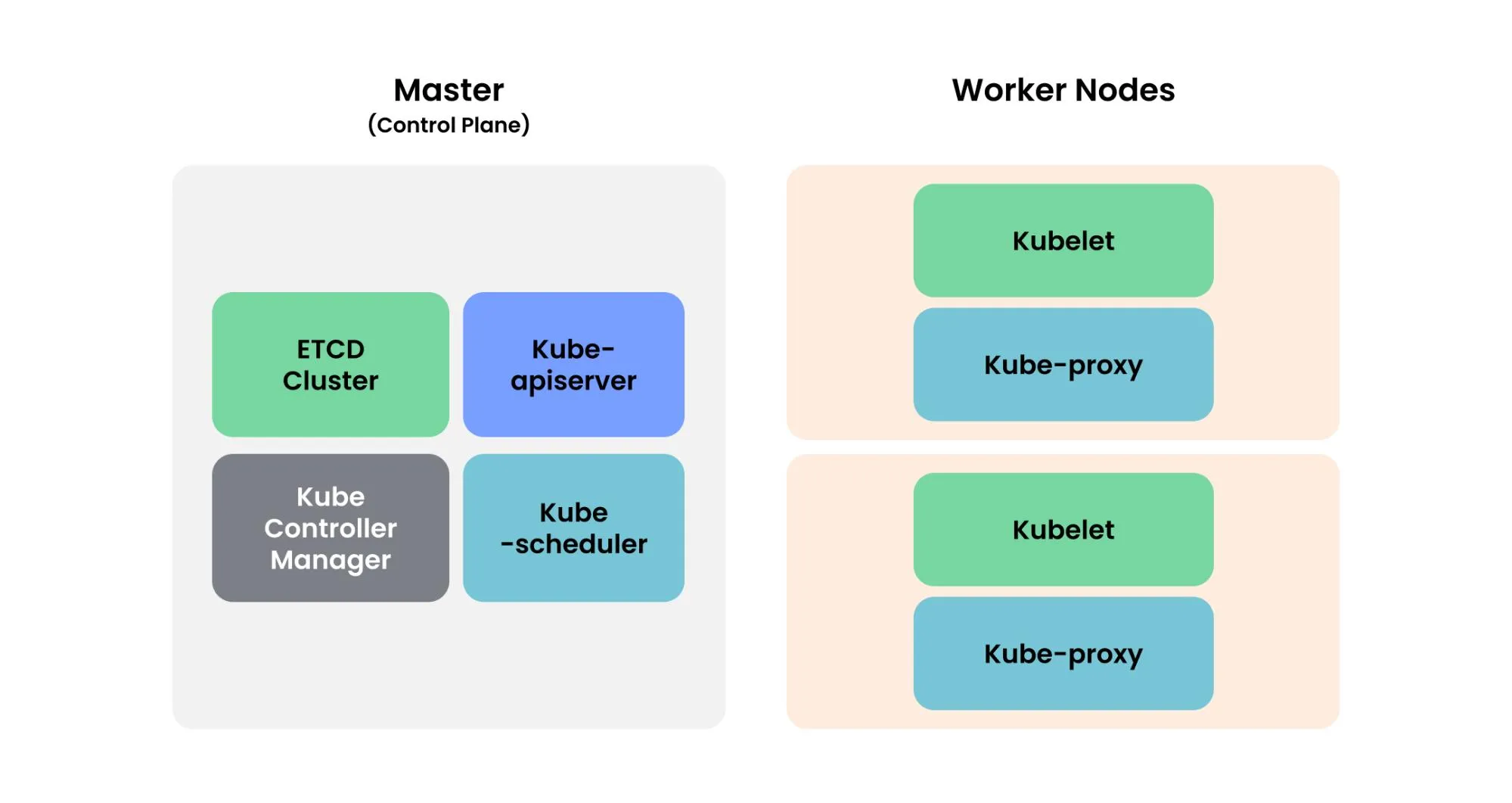

A typical Kubernetes cluster consists of a Master Node (control plane) and Worker Nodes, as shown in the figure above.

With Amazon EKS, AWS manages the Master Node instead of the operator, so even complex tasks such as upgrading the cluster can be done simply from the AWS Console. In addition, the Cluster Autoscaler on EKS can dynamically adjust the number of cluster Nodes through AWS Auto Scaling Groups, and monitoring and logging can be set up with minimal configuration. But it's not all good news.

Amazon EKS charges a per-cluster management fee, so for small clusters or single-instance setups, you may want to consider building the cluster yourself directly on EC2, provided the extra administrative burden is acceptable.

https://aws.amazon.com/ko/eks/pricing/

AutoScaling

AutoScaling is essential for stable operation. In Kubernetes in particular, Pod AutoScaling and Node AutoScaling must be applied together: adding Pods does not help if no Node has room to run them. Flexible AutoScaling can also lower operating costs, since capacity is released when traffic drops.

AutoScaling in Kubernetes

Pod AutoScaling - HPA (Horizontal Pod Autoscaler)

- The HPA Controller scales the number of Pods (Replicas) based on Pod metrics such as CPU or memory usage.
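The Kubernetes documentation describes the HPA's core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). A minimal Python sketch of that formula follows, assuming a single metric and the default tolerance of 0.1; it ignores Pod readiness, missing metrics, and scale-down stabilization, which the real controller also handles. The function name and parameters are illustrative, not part of any Kubernetes API.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified single-metric HPA calculation (illustrative sketch)."""
    ratio = current_metric / target_metric
    # Inside the tolerance band the controller leaves the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the HPA's minReplicas / maxReplicas bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 3 Pods averaging 90% CPU against a 50% target: ceil(3 * 1.8) = 6
    print(desired_replicas(3, 90, 50))
    # 4 Pods at 52% against 50%: ratio 1.04 is within tolerance, stays 4
    print(desired_replicas(4, 52, 50))
```

Because the ratio works in both directions, the same formula scales Pods down when usage falls below the target, bounded by `min_replicas`.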

Cluster AutoScaling

- There are two ways to do Cluster AutoScaling in Amazon EKS: using AWS Auto Scaling Groups and using Karpenter. In this article, I will introduce basic Cluster AutoScaling using AWS Auto Scaling Groups.

- Cluster Auto Scaling - AWS Cluster Autoscaler

The AWS Cluster Autoscaler adds Nodes to an Auto Scaling Group when Pods are pending due to insufficient resources.
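To make the scale-up decision concrete, here is a deliberately simplified sketch that estimates how many Nodes to add for a set of pending Pods. It assumes a single instance type with identical allocatable CPU and memory and uses first-fit-decreasing bin packing; the real Cluster Autoscaler simulates the scheduler itself and also deals with multiple node groups and scale-down. `Resources` and `nodes_to_add` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Resources:
    cpu_millicores: int
    memory_mib: int

    def fits(self, other: "Resources") -> bool:
        """True if a request of size `other` fits in this capacity."""
        return (other.cpu_millicores <= self.cpu_millicores
                and other.memory_mib <= self.memory_mib)


def nodes_to_add(pending: List[Resources], node_capacity: Resources,
                 current_nodes: int, max_nodes: int) -> int:
    """Estimate the new Nodes needed to schedule the pending Pods (sketch)."""
    free: List[Resources] = []  # remaining capacity of each hypothetical new Node
    # First-fit decreasing: place the largest requests first.
    for pod in sorted(pending, key=lambda r: (r.cpu_millicores, r.memory_mib),
                      reverse=True):
        if not node_capacity.fits(pod):
            continue  # would not fit even on an empty Node; adding Nodes won't help
        for slot in free:
            if slot.fits(pod):
                slot.cpu_millicores -= pod.cpu_millicores
                slot.memory_mib -= pod.memory_mib
                break
        else:
            # No existing new Node has room: open another one.
            free.append(Resources(node_capacity.cpu_millicores - pod.cpu_millicores,
                                  node_capacity.memory_mib - pod.memory_mib))
    # Never grow the Auto Scaling Group beyond its maximum size.
    return min(len(free), max(0, max_nodes - current_nodes))


if __name__ == "__main__":
    node = Resources(cpu_millicores=2000, memory_mib=4096)  # hypothetical instance size
    pods = [Resources(500, 1024) for _ in range(5)]
    print(nodes_to_add(pods, node, current_nodes=3, max_nodes=10))
```

With four 500m/1024Mi Pods fitting per Node, five pending Pods need two new Nodes; lowering `max_nodes` caps the result, just as the ASG's maximum size caps a real scale-up.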

AutoScaling Flow

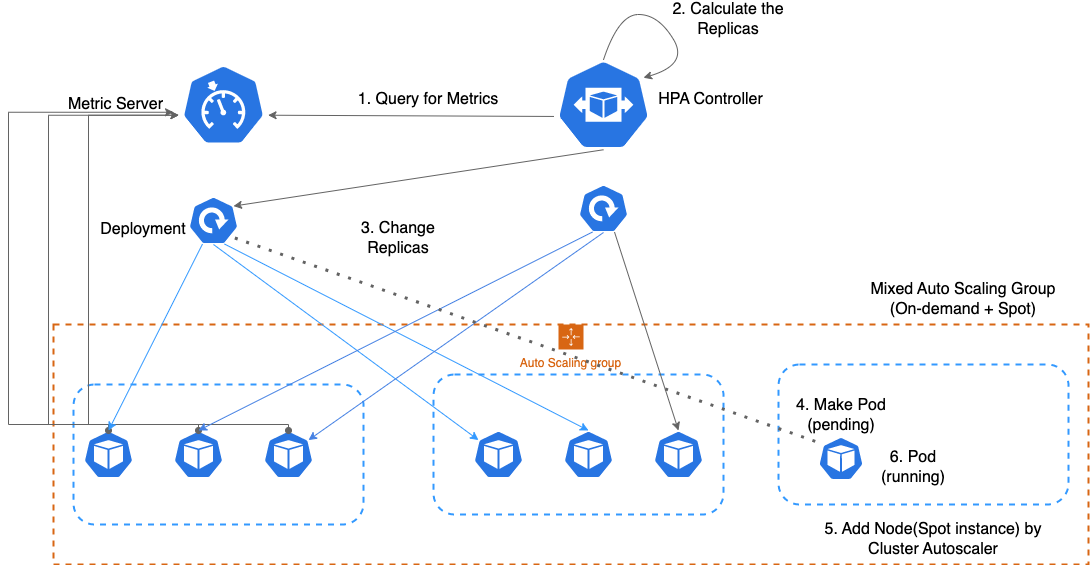

- The HPA Controller periodically queries the Metrics Server.

- If the Pods' CPU/memory metric moves away from the configured target (for example, exceeds it), it adjusts the number of Replicas.

- It updates the Deployment with the new Replica count (the desired number of Pods).

- The Deployment creates Pods to match that Replica count.

If all Nodes run out of resources, the new Pods stay Pending.

- When the Cluster Autoscaler sees a Pending Pod, it adds a Node to the Auto Scaling Group (ASG).

- The Pod is scheduled onto the new Node.

- Pod status: Pending → Running

AutoScaling Strategy: Optimize Costs with Spot + On-Demand Instances

Source: https://awskrug.github.io/eks-workshop/spotworkers/

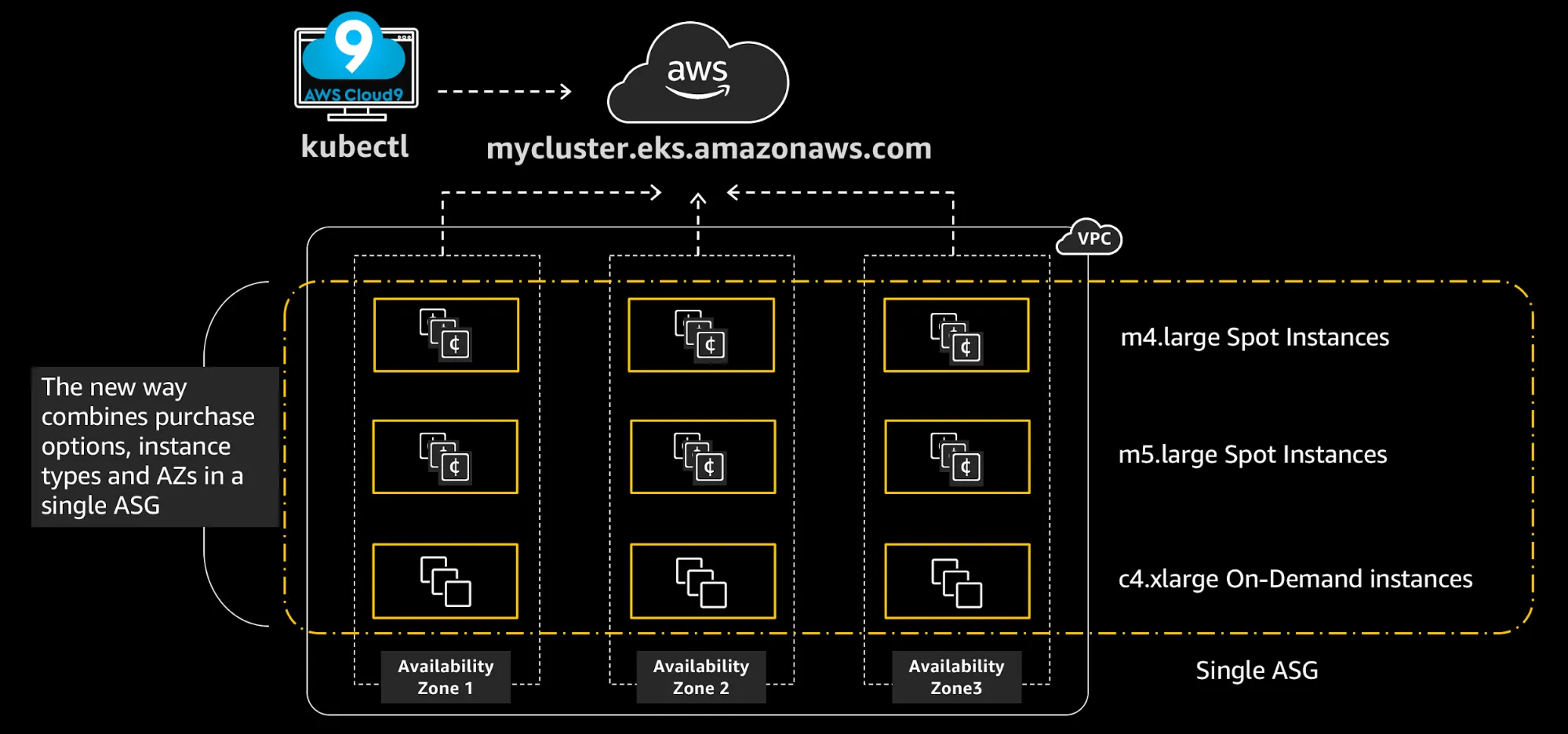

The basic strategy is as follows:

- Keep the On-Demand NodeGroup to the minimum size needed for a stable service

- Use a Spot NodeGroup to absorb fluctuating traffic → Spot Nodes are used when the On-Demand Nodes' resources are exhausted

With a Taint, you can steer Pods onto Spot Nodes only when they cannot be scheduled on On-Demand Nodes.

- Set the Taint effect of the Spot NodeGroup to PreferNoSchedule
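The effect of PreferNoSchedule can be illustrated with a simplified node-selection sketch. It assumes Pods carry no tolerations and models only CPU; it roughly mirrors how the scheduler's taint scoring penalizes Nodes with untolerated PreferNoSchedule taints, but it is not the kube-scheduler's actual code. `Node`, `pick_node`, and the node names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Taint effects that make a Node infeasible for a Pod without tolerations.
HARD_EFFECTS = {"NoSchedule", "NoExecute"}


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    # Taint effects on the Node, e.g. ["PreferNoSchedule"] for the Spot NodeGroup
    taint_effects: List[str] = field(default_factory=list)


def pick_node(nodes: List[Node], cpu_request: int) -> Optional[Node]:
    """Pick a Node for a Pod with no tolerations (simplified scheduler sketch)."""
    # Filter: enough free CPU and no hard taint the Pod would need to tolerate.
    feasible = [n for n in nodes
                if n.free_cpu_millicores >= cpu_request
                and HARD_EFFECTS.isdisjoint(n.taint_effects)]
    if not feasible:
        return None  # Pod stays Pending; the Cluster Autoscaler may add a Node
    # Score: fewer PreferNoSchedule taints wins; ties go to the most free CPU.
    return min(feasible,
               key=lambda n: (n.taint_effects.count("PreferNoSchedule"),
                              -n.free_cpu_millicores))


if __name__ == "__main__":
    nodes = [
        Node("ondemand-1", free_cpu_millicores=1000),
        Node("spot-1", free_cpu_millicores=4000, taint_effects=["PreferNoSchedule"]),
    ]
    print(pick_node(nodes, 500).name)   # On-Demand Node is preferred
    print(pick_node(nodes, 2000).name)  # only the Spot Node has room
```

Because PreferNoSchedule is a soft preference, the Spot Node is used only when the On-Demand Node cannot fit the Pod. On a real cluster the taint would be applied with something like `kubectl taint nodes <node-name> lifecycle=spot:PreferNoSchedule`, where the `lifecycle=spot` key and value are only an example.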

The biggest concern with Spot is that a Spot Instance can be interrupted at any time, with only a two-minute warning. To keep the service running through an interruption, you need to take the following actions.

- Detect the upcoming interruption

- If the Node is about to shut down, use a Taint to prevent new Pods from being scheduled onto it

- Drain the Pods on that Node

- Reschedule the evicted Pods onto the remaining Nodes

What makes this possible is the AWS Node Termination Handler.

In IMDS (Instance Metadata Service) mode, it is installed as a DaemonSet so that a copy runs on each Node, where it monitors the instance metadata and performs the process described above when the instance is scheduled for termination. (Using a Label, the DaemonSet can be limited to Spot Nodes only.)
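The monitoring step can be sketched in Python. EC2 exposes a scheduled Spot interruption at the `spot/instance-action` metadata path, which returns 404 when nothing is scheduled. The sketch below reads it via IMDSv2 and turns the notice into the cordon/drain steps listed earlier. It only builds equivalent `kubectl` commands as strings instead of calling the Kubernetes API as the real handler does, and `fetch_instance_action`, `plan_response`, and the node name are hypothetical.

```python
import json
import urllib.error
import urllib.request
from datetime import datetime, timezone
from typing import List, Optional

IMDS = "http://169.254.169.254/latest"


def fetch_instance_action(timeout: float = 1.0) -> Optional[str]:
    """Return the raw instance-action document, or None if no interruption is
    scheduled. Uses IMDSv2 (session token first); only works on EC2."""
    token_req = urllib.request.Request(
        f"{IMDS}/api/token", method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": "60"})
    token = urllib.request.urlopen(token_req, timeout=timeout).read().decode()
    req = urllib.request.Request(
        f"{IMDS}/meta-data/spot/instance-action",
        headers={"X-aws-ec2-metadata-token": token})
    try:
        return urllib.request.urlopen(req, timeout=timeout).read().decode()
    except urllib.error.HTTPError as err:
        if err.code == 404:  # 404 means no interruption is pending
            return None
        raise


def plan_response(instance_action: Optional[str], node_name: str,
                  now: datetime) -> List[str]:
    """Turn an instance-action notice into the handling steps (as strings)."""
    if instance_action is None:
        return []
    notice = json.loads(instance_action)
    when = datetime.fromisoformat(notice["time"].replace("Z", "+00:00"))
    seconds_left = int((when - now).total_seconds())
    return [
        f"{notice['action']} scheduled in {seconds_left}s",
        f"kubectl cordon {node_name}",  # stop new Pods landing on the Node
        f"kubectl drain {node_name} --ignore-daemonsets --delete-emptydir-data",
    ]


if __name__ == "__main__":
    # Sample notice instead of a live IMDS call, so this runs anywhere.
    sample = '{"action": "terminate", "time": "2017-09-18T08:22:00Z"}'
    now = datetime(2017, 9, 18, 8, 20, 0, tzinfo=timezone.utc)
    for step in plan_response(sample, "ip-10-0-1-23", now):
        print(step)
```

Keeping the parsing separate from the network call makes the decision logic testable off EC2; a real handler would poll `fetch_instance_action` in a loop.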

Deployment

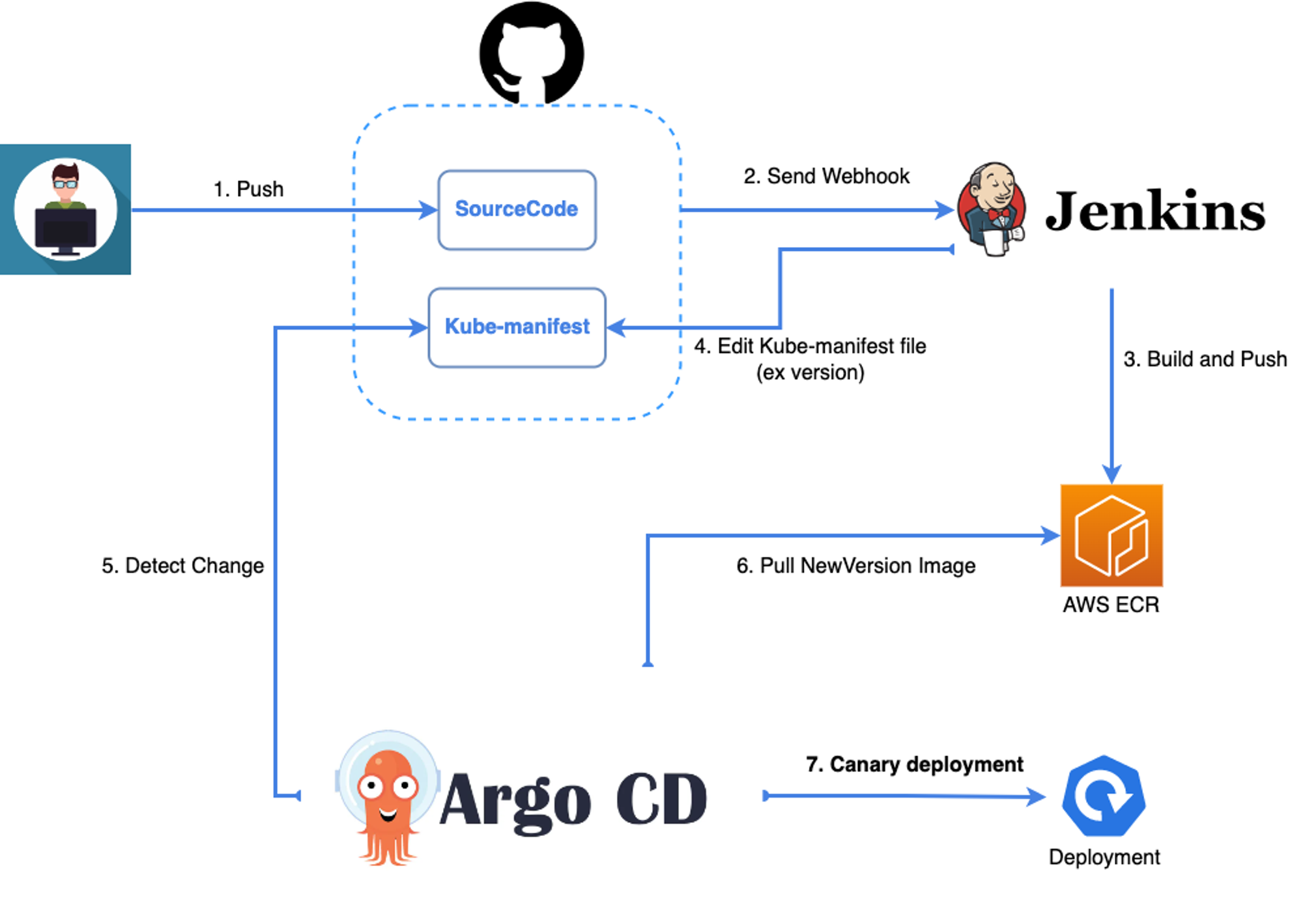

Once you have created a cluster on EKS, created a NodeGroup, and applied the AutoScaling strategy, the basic architecture is complete. You can now set up the following architecture to build and deploy your application on Kubernetes.

- GitHub: Stores the source code and Kubernetes manifest files, and sends a webhook to Jenkins when the source code changes.

- Jenkins: Pulls the changed code and builds the image → pushes it to AWS ECR and updates the image version in the Kubernetes manifest on GitHub.

- Argo CD: Detects changes in the Kubernetes manifest (Sync) → Canary deploy

- Canary Deploy: Deploys the new version to only a small number of Pods first, then rolls it out incrementally, so a bad release affects only a fraction of traffic and availability is preserved.
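Two steps of this pipeline can be sketched in Python: the Jenkins step that bumps the image tag in the manifest, and the Pod counts a canary rollout would move through. This is a minimal sketch under stated assumptions: the manifest uses `image: <repository>:<tag>` lines, the canary weights are percentages of total replicas, and the ECR repository URL, weights, and function names are hypothetical.

```python
import math
import re
from typing import List


def bump_image_tag(manifest: str, image: str, new_tag: str) -> str:
    """Replace the tag of `image` in manifest text, leaving the rest untouched.

    Assumes lines of the form `image: <repository>:<tag>`.
    """
    pattern = re.compile(rf"(image:\s*{re.escape(image)}):[\w.\-]+")
    return pattern.sub(rf"\g<1>:{new_tag}", manifest)


def canary_pod_counts(total_replicas: int, weights: List[int]) -> List[int]:
    """Canary Pods at each step, given weights as percentages of total replicas.

    Rounds up and always runs at least one canary Pod.
    """
    return [max(1, math.ceil(total_replicas * w / 100)) for w in weights]


if __name__ == "__main__":
    repo = "123456789012.dkr.ecr.ap-northeast-2.amazonaws.com/my-app"  # hypothetical
    manifest = f"    spec:\n      containers:\n        - image: {repo}:1.0.3\n"
    print(bump_image_tag(manifest, repo, "1.0.4"))
    print(canary_pod_counts(10, [10, 30, 60, 100]))
```

Editing the manifest as text rather than re-serializing the YAML keeps the diff that Argo CD syncs down to the single changed tag.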

Closing thoughts

I know that many people are hesitant to adopt Kubernetes in production because of the learning curve. But as the comedian Myung Soo Park famously said, "When you think it's too late, it really is too late," so I think it is time to jump on the Kubernetes bandwagon before it gets any later.
